Dangerous Dashboard Design

I recently had the great pleasure of working on a dashboard project using PerformancePoint in SharePoint 2013 with a great team of individuals. We had to overcome several obstacles during the process and I thought it would be a good idea to document how we accomplished some of our goals with the hope that I can spare someone else (or my future self) from having to go through the same pain. Our dashboard is currently in closed beta and we will be releasing it to the wild in the next couple of weeks.

Dynamic Active Directory Security Without Kerberos

The biggest challenge we faced was how to implement dynamic active directory security without using Kerberos. I had applied dynamic security to several Analysis Services databases in the past; but, had never followed that implementation through to PerformancePoint dashboards. This challenge is more difficult in that respect because within a dashboard you will typically have more sources of data than just an analysis services database. We had SSRS reports that ran against our ODS and our relational data warehouse. You can read more about how we achieved this in my recent blog post, PerformancePoint 2013 Dashboards: Applying Dynamic Security using CustomData and EffectiveUserName.

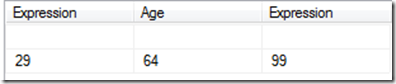

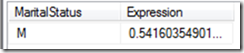

Our solution required three roles. Two roles for the managers who would be restricted to seeing only their employees. One role for the PerformancePoint objects which uses the CustomData function in the security and another for the SSRS MDX based reports and Excel Services based reports that uses the UserName function. Finally we needed a separate unrestricted role for the managers and district leaders that could see all of the data. This allowed us to keep our bridge table relatively small. Otherwise every person that needed to see every person would require about 2,500 rows in the bridge table.

During develoment, I mistakenly thought we could create the entire dashboard for the restricted users, save a copy and rename it to unrestricted, change the data source to use the unrestricted role and be on our way. I was woefully mistaken. Apparently PerformancePoint uses some sort of invisible GUID or something for the data source name. Worse yet, you can’t change the data source for existing Analytic charts, grids, and scorecards. So we couldn’t make a copy of the objects, rename them, and point them to a different data source. We actually had to use two different site collections for the restricted and the unrestricted dashboards!

I Know Who You Are and What You Did Last Quarter

Once we had the dynamic security in place we wanted the dashboard to automatically know who you are. We accomplished this by adding an attribute to our person dimension that would hold the active directory account name. I use the word person in it’s most generic sense. A person can be a vendor, customer, employee, manager, or sales person. I created a MDX filter in PerformancePoint and used the following MDX code to achieve this:

STRTOSET(“[People].[Active Directory Login].&[” + CUSTOMDATA() + “]”)

I then added this attribute to any analytic charts, grids, or scorecards in the background quadrant of the design canvas so that the report could be filtered by it. I connected the filter to the report as one typically would and later hid the filter using the edit web part interface within the SharePoint 2013 site.

Save the Trees

My good buddy Michael Simon used some fancy MDX to set the trailing six quarters for an analytic bar chart only to discover that we lost the ability to right click and get the decomposition tree and all the other neat PerformancePoint features. He discovered a clever work around using dynamic named sets. We had our right clicks back.

Yesterday… Not Such an Easy Game to Play

Another challenge we faced is a very common one. How to get the dashboard to automatically present data as of yesterday. Typically this wouldn’t be a big deal; but, in our case we use a reporting calendar that doesn’t include Saturdays, Sundays or Holidays. Any sales that occur on those days roll forward to the next business day. If you don’t have such a dilemma you can use either of these two snippets to accomplish this feat:

1. STRTOSET(“[Date].[Calendar].[Date].&[“+ VBAMDX.Format(VBAMDX.Now()-1, “yyyyMMdd”) +”]”)

2. STRTOSET(“[Date].[Calendar].[Date].&[“+ VBAMDX.Format(VBAMDX.Now(), “yyyyMMdd”) +”].Lag(1)”)

In our case, this wouldn’t work. If the user wanted to look at the dashboard on a weekend or a holiday they would get an error.

I initially tried to set the default member of the dimension to yesterday using MDX. I ran into a problem in that once I set the default member for the date dimension in the cube I could no longer put the date dimension in the report filter area of an Excel Pivot table. It would no longer work and would just show yesterday’s data. See this link for more information about that issue: Default Member Trap (be careful of what you filter)

My next strategy was to add an attribute to our Gregorian date dimension called Last Business Date and update the corresponding relational table with this information. I then attempted to parse together the information to get the correct reporting date. We have two date dimensions in our cube. One is a regular calendar date dimension, the other one is a fiscal reporting date dimension that doesn’t include weekends or holidays. Our fact table has keys for both. You can see my post asking for help from the BIDN community here:

Before I received the kind reply from mreedsqlbi, I found another way to accomplish what I needed. This post helped me find another solution: Member Does Not Exist in MDX Calculations

I created four dynamic named sets in the cube called, OneDayAgo, TwoDaysAgo, ThreeDaysAgo, and FourDaysAgo.

Here is the code for OneDayAgo:

iif(iserror(STRTOMEMBER(“[Date – Reporting].[Date].&[” + VBAMDX.Format(VBAMDX.Now()-1, “yyyyMMdd”) +”]”)),{},STRTOMEMBER(“[Date – Reporting].[Date].&[” + VBAMDX.Format(VBAMDX.Now()-1, “yyyyMMdd”) +”]”))

For each of the other named sets, I just changed the –1 to a –2, –3, and –4 respectively.

The code for my MDX filter used the dynamic named sets to figure out if the member exists:

IIF([OneDayAgo].Count > 0

,STRTOMEMBER("[Date - Reporting].[Date].&[" + VBAMDX.Format(VBAMDX.Now()-1, "yyyyMMdd") +"]")

,IIF([TwoDaysAgo].Count > 0

,STRTOMEMBER("[Date - Reporting].[Date].&[" + VBAMDX.Format(VBAMDX.Now()-2, "yyyyMMdd") +"]")

,IIF([ThreeDaysAgo].Count > 0

,STRTOMEMBER("[Date - Reporting].[Date].&[" + VBAMDX.Format(VBAMDX.Now()-3, "yyyyMMdd") +"]")

,IIF([FourDaysAgo].Count > 0

,STRTOMEMBER("[Date - Reporting].[Date].&[" + VBAMDX.Format(VBAMDX.Now()-4, "yyyyMMdd") +"]")

,STRTOMEMBER("[Date - Reporting].[Date].&[" + VBAMDX.Format(VBAMDX.Now()-5, "yyyyMMdd") +"]")

)

)

)

)

UPDATE:

This works better because it works in Dev which doesn’t always have data for yesterday.

TopCount(ORDER( NONEMPTY( {[Date – Reporting].[Date].Members

– [Date – Reporting].[Date].[All]}

, [Measures].[Sales Amt] )

, [Date – Reporting].[Date].CurrentMember.MEMBER_KEY

, DESC )

,1)

UPDATE:

At one point during Beta testing, I was asked to present the user with a date dropdown that defaults to yesterday. This was later scrapped because it required a server wide change within the Central Administration Application Settings for PerformancePoint in which we had to set the user selected filters to expire every day.

For anyone who is interested, I got that to work by removing the TOPCOUNT from the MDX formula filter in Dashboard Designer:

ORDER( NONEMPTY( {[Date – Reporting].[Date].Members – [Date – Reporting].[Date].[All]},

[Measures].[Sales Amt] ), [Date – Reporting].[Date].CurrentMember.MEMBER_KEY, DESC )

This only shows dates that have data, excludes the all member, and sorts it in descending order. The result is that it defaults to yesterday but allows you to change it.

UPDATE

I think I’m crazy; but, I could have sworn the dashboard was showing Friday’s data on Monday. Looking at the code, I don’t see how; but, I am amazed no one has pointed it out.

I had to modify the code to eliminate Today’s date from the data set.

TopCount

(

Order

(

NonEmpty

(

{

[Date – Reporting].[Date].MEMBERS

– [Date – Reporting].[Date].[All]

– STRTOMEMBER(“[Date – Reporting].[Date].&[” + VBAMDX.Format(VBAMDX.Now(), “yyyyMMdd”) +”]”)

}

,[Measures].[Sales Amt]

)

,[Date – Reporting].[Date].CurrentMember.Member_Key

,DESC

)

,1

)

Filters

Another challenge we faced was how to have MDX filters control relational reports. This was pretty easy. Just use the DisplayName attribute of the filter instead of MemberUnique and let the report figure out how to use that information. We were unable to use multi-select MDX member selection filters. When we passed these as DisplayValue to our relational SSRS reports it would only pass the first selection. We were unable to overcome this limitation the way we would have liked. We ended up not using multi-select filters and instead had filters that only contained the parent level of the hierarchy. In other words, our filter contained managers and the report would display the managers employees. We lost the ability to have the user only select some employees of that manager; but, not others. To my knowledge, there is not a way to pass MDX multi-value filters using the DisplayValue property to relational SSRS reports.

Staying On Top of Reporting Services

One aggravation we experienced was that the column headers on the SSRS reports would not stay at the top of the web part when the user scrolled through the report part. There are many blog posts out there on how to achieve this; but, within SharePoint 2013 it would work in Firefox and Chrome; but, not in Internet Exploder (9 or 10.) I had to workaround this issue by sizing the report so it wouldn’t scroll, and instead display the toolbar and have the user page through the data instead of scroll. Which in my opinion, looked nicer anyway.

I’ve got to mention one gotcha we experienced during our dashboard design endeavor that I had experienced many years ago. I thought I had blogged about it in my What’s New BI-wise section; but, I couldn’t find it so I must have dreamed I had blogged about it. Any way here are some words of wisdom:

When you are creating an SSRS MDX based report and need to make a customization to the underlying MDX that is created using the easy to use drag and drop interface, make a backup or better yet, check it into source control and label it ‘Pre-MDX customizations’ or something very clear like that.

Once you have made those customizations to the MDX (to allow that member property to be displayed for example) you can’t go back! No longer can you simply delete all your parameters and add different ones easily. Everything has to be done through hand coded MDX from that point forward. It is far easier to design your report as much as possible, make a backup or labeled source control check-in, and then make your MDX customizations. If you need to add/remove fields or parameters in the future it is easier to go back to that pre-MDX customized version and do it using the interface and then re-do the MDX customization than to work with it outside the interface.

Scorecards Don’t Always Score

Scorecards are really cool gadgets to use in PerformancePoint dashboards because you can use them to control other objects. Filters and scorecards are the only way to control objects in the PerformancePoint dashboard. When you see a scorecard functioning well inside a PerformancePoint dashboard it looks really cool. As you click on the various members within the scorecard you can have charts and data change according to the context.

We could not use scorecards the way we wanted because we simply had too many members in our dimension for them to render quickly enough. They don’t work as you would expect. Doing the same type of thing in an analytic grid or Excel services report is a piece of cake and renders very fast. For whatever reason, regardless of the fact that I filtered the scorecard on the manager, it would insist on rendering every employee in the company and then hiding the ones that weren’t children of the selected manager. In our case that was a little less than 5,000 members. I reduced this set to about 2,800 by deleting employees that weren’t used as keys in any of our fact tables; but, it was too late. The powers that be had already decided that scorecards stink due to not only the rendering speed; but, the hoops we had to jump through to develop one using that many members. Even in the developer’s IDE, Dashboard Designer, it would pull back every single representative regardless of being filtered on a single parent member. It would display those in a red font indicating that they would be hidden; but, the time it took the designer to render made you think it had crashed. We actually had to build a cube containing a very small subset of the data in order to design the scorecard the way we wanted.

From a pure developer’s standpoint it seems that the scorecard design process in PerformancePoint needs some maturing. Some configurations that are set within the wizard for creating an Analysis Services based scorecard are simply not editable outside of the wizard. One would need to take screenshots of how the scorecard was developed to document the process in the event that a change was ever needed to one of those initial configurations.

While I’m on the subject of scorecards, another gripe we had about the way scorecards work is that any time you have more than one set of KPI actuals and targets within a scorecard it displays them with all of the actuals on the left and trends or targets on the right. In other words, if I have a sales actual and goal KPI on the same scorecard as a gross profit actual and goal, I can only show them as actual sales, actual gross proft, target sales, target gross profit. I would like some control to show the targets next to their corresponding actuals.

Easy Migrations

One new feature of SharePoint 2013 that I really like is the new way it handles exporting and importing a dashboard project. In previous versions of SharePoint, I had to create long lists of customizations that had to be done to the web parts after Dashboard Designer had deployed the dashboard. Things such as hiding filters, resizing objects, and changing the chrome settings had to be done over and over again every time we made a change to the dashboard that required a re-deployment. Moving the project from the development environment to the QA environment and finally to the production environment was a manual tedious process. Furthermore, these customizations were not stored in source control. I was pleased as punch to discover the export/import feature of SharePoint 2013 solves both problems. I can extract the resulting .CMP file using a cab extractor and put the entire project into source control and I don’t have to repeat those customizations over and over again.

Accepting the Unacceptable

I’ve been creating dashboards since PerformancePoint 2007. Make no mistake, I do not consider myself an expert at dashboard design. I would however consider myself pretty advanced in using the Microsoft BI stack to produce great dashboards quickly. I really like using PerformancePoint. If you are willing to accept some of the quirks w/ PerformancePoint, it is a great tool to allow a developer to very quickly produce meaningful dashboards with a lot of functionality right out of the box. When you start getting very particular about the way things look it gets more complicated. For example, I can only show the legend at the top or to the right of my analytic charts. I can’t show them on the left and I can’t show them on the bottom. I can’t control the color of the bars in the bar charts. I sometimes joke that designing PerformancePoint dashboards is a great opportunity for someone who likes to say no. Sure, we can give you a bar chart w/ the colors you want or with the legend on the left; but, I have to do that in Reporting Services and we lose all of the cool right click functionality that is included in the analytic chart such as the decomposition tree.

Fifty Shades Of Gray Is Not Enough

I’ll never forget one project I worked on as a consultant with a very lovely lady who had designed her dashboard on paper before she had any idea of what PerformancePoint could and could not do easily. A simple thing like showing the total dollar amount at the top of the bar chart involved creating a separate reporting services web part that only showed that total and placing it above the chart. The end result was a really good looking dashboard that looked remarkably similar to what had been envisioned. It just took a lot of extra time to make it look exactly how it was imagined.

One other piece of advice I can give is to apply a lot of rigor in your testing. Make sure each page of the dashboard uses consistent nomenclature. Make sure that the numbers on all pages agree with each other. If one page says that gross profit is this and another says gross profit was that, you’re going to get a lot of questions from your user community. Be consistent in your color and number formatting as well. Are you going to display zeros or leave the cells blank? I personally prefer to leave the cells blank; but, it is more important that whatever you decide you stay consistent. Decide beforehand what screen resolution you are designing to. If everyone at your company has widescreen 17 inch monitors than it might be best to design your dashboard to look best at 1920×1080. If you need to show your dashboard on an iPad or a Surface than you better have one lying around. Good luck with that by the way. Consider which browser is your company’s standard. HTML5 or not, things look different in Chrome, Firefox, Safari and Internet Exploder versions 8, 9, 10, or 11.

Awwww…

As I wrap this up, I just want to give a big warm thank you to the other members of my team, Sherri McDonald and Michael Simon. A lot of hard work and long hours went into this project and it feels great to have professionals like them to ease the burden. It’s a much better experience than working solo. Three heads are much better than one and it feels great to have others throw rocks at your stuff and see where it breaks before the real critics get their hands on it.

Do you have a good dashboard design story? Did I leave a danger out? Please let us know in the comments.

——————————————————————————-

Other Dashboarding Resources

One person that I would consider an expert on dashboard design is Stephen Few. I recently read on his blog that a second edition of his book, Information Dashboard Design: The Effective Visual Communication of Data, is being released shortly. He mentioned in that post that one of the criticisms he received on the first edition that is addressed in the second edition is the lack of real examples of effective dashboards by real products. His explanation is that at that time there simply weren’t any products released that were capable of producing what he considered really good dashboard designs. Be sure to read his article Common Pitfalls in Dashboard Design for a great list of dashboard design gotchas.

Links

PerformancePoint 2013 Dashboards: Applying Dynamic Security using CustomData and EffectiveUserName

PerformancePoint – Effectively Disable Analytic Grid Right Click Select Measures Functionality

Time Intelligence Filters in PerformancePoint 2010

Cascading Filters in Performance Point Services dashboard using SharePoint 2013

Performance Point Relative Date Time Intelligence with current date time

How to make SSRS reports in a PerformancePoint dashboard ‘pop out’ or open in a new window.

Clutter, data overload put dashboard designs on path to failure

Data Visualization and Dashboard Design

Formatting your PerformancePoint Analytic Grid!

Using SSAS MDX Calculation Color Expressions

Time Intelligence Post Formula Filters

Set Default Value of PerformancePoint Filter – Note: Caveat to this tip- Once a user changes the default value SharePoint will remember the changed value and not reset the default value.

Filter Connection Quick Reference

——————————————————————————-

All my PerformancePoint links – Updated as I update them!

——————————————————————————-

PerformancePoint Gotcha! – “PerformancePoint Services could not connect to the specified data source.” When creating a new dashboard, you MUST do a save all right after you create your data sources otherwise you won’t be able to create anything that uses that data source.

——————————————————————————-

Gotcha! PerformancePoint Time Intelligence and SSRS

I was trying to use a PerformancePoint time intelligence filter to pass values to a SSRS report. I discovered that the MonthToDate, YearToDate, QuarterToDate syntax does not work with SSRS. Instead use Month.FirstDay:Day, Year:FirstDay:Day, Quarter.FirstDay:Day

——————————————————————————-

Zero to Dashboard- Intro to PerformancePoint

Come check out the PerformancePoint hotness! Mike will demonstrate the functionality in PerformancePoint services in SharePoint 2010 and show you how to quickly build some dynamic dashboards and reporting functionality your end users will crave.

http://pragmaticworks.com/Resources/webinars/WebinarSummary.aspx?ResourceID=327

——————————————————————————-

PerformancePoint Tip – Right clicking an analytic grid in the leftmost columns allows the user to select measures. Some people may not want that, so how do you disable that ability? PerformancePoint Services Application Settings – Select Measures Control – set maximum value to 0. (default is 1000).

http://technet.microsoft.com/en-us/library/ee620542.aspx

——————————————————————————-